The Replicant Labs series pulls back the curtain on the tech, tools, and people behind the Thinking Machine. From double-clicks into the latest technical breakthroughs like Large Language Models to first-hand stories from our subject matter experts, Replicant Labs provides a deeper look into the work and people that make our customers better every day.

Meghna Suresh, Head of Product, Replicant

Replicant’s Large Language Model layer delivers more resolutions, faster time-to-value and strengthened security for contact centers.

With the latest neural network advancements, our LLM layer ushers in the next evolution of natural, automated customer service. And callers don’t need to change a thing to reap the benefits.

In our first live flow with the LLM layer – an intake flow collecting car details during emergency roadside service calls – the Thinking Machine achieved a soaring 90% resolution rate right out of the gate.

But it isn’t magic. Under the hood, we’ve taken a calculated approach to leverage the immense potential of LLMs like ChatGPT, while safeguarding contact centers from their risks.

Replicant knows that enterprise-level challenges require enterprise-grade solutions. The new features made possible by our LLM layer extend the Thinking Machine’s ability to improve customer experiences while protecting businesses from vulnerabilities.

Here’s a deeper look at what’s new:

Resolve More Customer Issues

Contact centers rely on the Thinking Machine for its best-in-class resolution rates and intent recognition accuracy. With LLMs, it can now better navigate common obstacles in a customer service conversation.

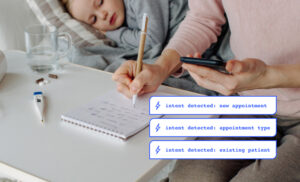

1-Turn Problem Capture. Replicant’s LLM layer captures multiple intents and entities at once, then drives action off of it. A Thinking Machine being used to schedule healthcare appointments, for example, can simply ask a caller “How can I help you today?” The customer can respond with a natural sentence like, “I’d like to schedule a follow-up for my three-year-old next week,” and the Thinking Machine can quickly detect multiple intents, like appointment type and patient type, driving faster resolutions.

Logical Reasoning. LLMs improve the deductive abilities of the Thinking Machine without requiring explicit training to do so. In the above example, the Thinking Machine implicitly understands that “for my three-year-old” means the appointment type is pediatric, and that a follow-up appointment signifies an existing patient, which can be verified against a CRM with the caller’s phone number or other authentication steps.

Contextual Disambiguation. Customers often say things that are somewhat ambiguous. Older NLU models struggled to determine what action to take during these instances. For example, a customer trying to decide on an appointment time might say, “Actually, Tuesday morning might not work if my first meeting runs long.” Our LLM layer enables the Thinking Machine to be fully aware of the nuance in a customer’s intent, and immediately follow up with more appointment options that might work better.

Dynamic Conversation Repair. After the Thinking Machine takes an action like scheduling an appointment, customers may still change their mind later in the conversation. They may remember they need to add a service, update a credit card, or even schedule another appointment. LLMs ensure seamless repair in conversation design, allowing the Thinking Machine to update a request on the fly without asking the customer to start over.

First-party Database Matching. LLMs have a vast knowledge of the world, but not of your business. Replicant’s LLM layer augments LLMs with your databases to match customers’ natural prompts to the context of your business. For example, if a customer booking a hotel room says “I want to stay in the city,” the Thinking Machine can reason that they’d prefer the downtown location for their stay rather than the airport location.

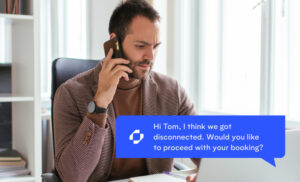

Intelligent Reconnect. Replicant’s LLM layer keeps structured records of each conversation turn, which allows the Thinking Machine to know in realtime what’s previously happened in a conversation and what needs to happen next. When calls get disconnected, the LLM dialog engine can follow an intelligent reconnect workflow to pick up right where the customer left off when they call back.

Complex Flows. Replicant’s LLM layer allows the Thinking Machine to handle higher levels of complexity. It can parse different SKUs, modifications to a request and changes to order quantities, allowing customers to make even more in-depth requests. With the LLM layer, contact centers can think beyond the confines of simple use cases like FAQs to resolve more call drivers and scale automation even further.

Faster Time-to-Value

Contact centers can deploy a Thinking Machine in weeks to navigate the unpredictable world of customer service even faster, while keeping project timelines within scope.

Few Shot Learning. Our LLM layer allows us to significantly reduce the development time required to customize a Thinking Machine around specific businesses and use cases by accelerating key design stages. Now, a solution can be optimized and deployed with fewer training cycles and minimal updating and retraining phases to achieve the best possible resolution rate in just weeks.

Safeguards and Control

Replicant’s LLM layer combines the power of the technologies like ChatGPT with the experience and security of our enterprise-grade Contact Center Automation platform.

Dialog Policy Control. LLMs don’t just work out of the box. On their own, they are susceptible to inaccurate, inappropriate or offensive responses that make them unfit to be connected directly with customers. Our dialog policy validates every piece of data we get back against a coded set of rules that govern what the LLM can say and do in our platform, preventing inappropriate or offensive responses.

Prompt Engineering. Replicant’s platform follows prescribed workflows and scripts, just as agents do. Our team has experience designing 10M+ conversations over six years and has created best practices to prompt and rigorously evaluate LLMs. Our workflow abstracts away the complexity of LLMs, making prompt-based conversation building highly accessible and reliable.

Always Secure. Our architecture safeguards customers’ sensitive data and protects against security vulnerabilities like prompt injection. This means, first and foremost, that customers’ PII is never passed on to third-party LLMs. In addition, our LLM layer is never susceptible to being “tricked” by user prompts that might otherwise lead an LLM to give an inappropriate response. Thinking Machine responses are always designed and pre-configured to be 100% predictable for each business and use case.

AI holds the ability to bring a wealth of promise – and potential peril – to your contact center. Come learn why contact center leaders continue to choose Replicant as their trusted partner for Contact Center Automation.

First Notice of Loss

First Notice of Loss